- USING GPU WITH DOCKER ON KUBERNETES UPGRADE

- USING GPU WITH DOCKER ON KUBERNETES SOFTWARE

- USING GPU WITH DOCKER ON KUBERNETES INSTALL

- USING GPU WITH DOCKER ON KUBERNETES DRIVERS

However, I have not yet been able to get GPUs to show up as schedulable resources (2).
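One way to check whether GPUs have become schedulable is to read each node's allocatable resources from `kubectl get nodes -o json`. The sketch below assumes the resource name `nvidia.com/gpu` advertised by the NVIDIA device plugin. The sample node list is trimmed and made up; in practice you would pass in `json.loads` of the real kubectl output.

```python
import json

# Resource name advertised by the NVIDIA device plugin (an assumption about your setup).
GPU_RESOURCE = "nvidia.com/gpu"


def allocatable_gpus(nodes: dict, resource: str = GPU_RESOURCE) -> dict[str, int]:
    """Map each node name to its allocatable count of `resource` (0 if absent)."""
    counts = {}
    for node in nodes.get("items", []):
        allocatable = node.get("status", {}).get("allocatable", {})
        # GPU quantities are whole numbers serialized as strings, e.g. "4".
        counts[node["metadata"]["name"]] = int(allocatable.get(resource, "0"))
    return counts


# Trimmed, made-up output of `kubectl get nodes -o json`.
sample = json.loads("""
{"items": [
  {"metadata": {"name": "gpu-node-1"},
   "status": {"allocatable": {"cpu": "3920m", "nvidia.com/gpu": "4"}}},
  {"metadata": {"name": "cpu-node-1"},
   "status": {"allocatable": {"cpu": "3920m"}}}
]}
""")

for name, count in allocatable_gpus(sample).items():
    print(f"{name}: {count} allocatable GPU(s)")
```

A node that reports 0 here has no GPU resource advertised, which usually points at the device plugin or drivers rather than at the workload.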

USING GPU WITH DOCKER ON KUBERNETES UPGRADE

With the Kubernetes upgrade beyond 1.20 and Docker support being removed, I found that the preferred installation method now uses the “GPU Operator” according to (1). We previously used the “Nvidia-device-plugin”, which adds GPUs as a resource to Kubernetes. Our Kubernetes jobs are scheduled based on the percentage of a GPU they require (just as is possible for CPUs and memory). Is this the correct place to ask a technical question?

Issues I ran into:

- GPU Operator CUDA validator init containers cannot start on an AWS g4dn.xlarge node, failing with the error "all CUDA-capable devices are busy or unavailable". AWS p3/p2 instances seem to work fine.
- GPU Operator pods cannot spin up on a GKE cluster in the namespace gpu-operator-resources because of a pod priority issue, failing with the message "Error creating: insufficient quota to match these scopes". This is because GKE limits consumption of this priority class by default.

As of the time of writing, the only reference I could find was an issue on the gpu-monitoring-tools repo.
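For the GKE priority issue, a workaround I am aware of is a ResourceQuota in the operator's namespace whose scope selector matches the restricted priority classes, so that pods of those classes are admitted there. The sketch below builds such a manifest as JSON, which `kubectl apply -f` accepts. It assumes the operator pods use the `system-node-critical` and `system-cluster-critical` priority classes; the quota name `gpu-operator-quota` and the limit of 100 pods are arbitrary placeholders.

```python
import json


def priority_class_quota(namespace: str, priority_classes: list[str], max_pods: int = 100) -> dict:
    """Build a ResourceQuota that admits pods of the given priority classes in `namespace`."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {
            "name": "gpu-operator-quota",  # hypothetical name
            "namespace": namespace,
        },
        "spec": {
            # Quantities are strings in manifests; the pod limit is a placeholder.
            "hard": {"pods": str(max_pods)},
            # Scope the quota to pods whose priority class is in the list.
            "scopeSelector": {
                "matchExpressions": [
                    {
                        "scopeName": "PriorityClass",
                        "operator": "In",
                        "values": priority_classes,
                    }
                ]
            },
        },
    }


quota = priority_class_quota(
    "gpu-operator-resources",
    ["system-node-critical", "system-cluster-critical"],
)
print(json.dumps(quota, indent=2))
```

Saving the printed JSON to a file and applying it before installing the operator should let the pods be created; adjust the priority class list if your operator version uses different ones.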

USING GPU WITH DOCKER ON KUBERNETES SOFTWARE

Make sure the software you use is up to date. In particular, note the difference between the deprecated gpu-monitoring-tools, the standalone DCGM, and the GPU Operator. For example, at the time of writing, the NVIDIA GPU telemetry guide for Kubernetes asks you to observe DCGM_FI_DEV_GPU_UTIL to verify that DCGM is working, even though that metric is disabled by default. Also note that DCGM on a GKE cluster does not include pod or container information in its metrics.
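A quick way to check whether DCGM_FI_DEV_GPU_UTIL is actually exported is to search the exporter's Prometheus text output for it. The sketch below is a deliberately small parser for simple sample lines only (it does not handle escaped quotes or commas inside label values). The example text, including the label names and values, is made up for illustration.

```python
import re

# Matches simple Prometheus text-format samples such as:
#   DCGM_FI_DEV_GPU_UTIL{gpu="0",Hostname="node-a"} 37
_SAMPLE = re.compile(
    r'^(?P<name>[A-Za-z_:][A-Za-z0-9_:]*)(?:\{(?P<labels>[^}]*)\})?\s+(?P<value>\S+)'
)
_LABEL = re.compile(r'(\w+)="([^"]*)"')


def metric_samples(text: str, metric: str) -> list[tuple[dict[str, str], float]]:
    """Return (labels, value) pairs for every sample of `metric` in `text`."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        m = _SAMPLE.match(line)
        if m is None or m.group("name") != metric:
            continue
        labels = dict(_LABEL.findall(m.group("labels") or ""))
        samples.append((labels, float(m.group("value"))))
    return samples


example = """\
# HELP DCGM_FI_DEV_GPU_UTIL GPU utilization (in %).
# TYPE DCGM_FI_DEV_GPU_UTIL gauge
DCGM_FI_DEV_GPU_UTIL{gpu="0",Hostname="node-a"} 37
DCGM_FI_DEV_GPU_UTIL{gpu="1",Hostname="node-a"} 0
DCGM_FI_DEV_FB_USED{gpu="0",Hostname="node-a"} 1024
"""

util = metric_samples(example, "DCGM_FI_DEV_GPU_UTIL")
if not util:
    print("DCGM_FI_DEV_GPU_UTIL not exported; the counter may be disabled")
for labels, value in util:
    print(f"gpu {labels['gpu']}: {value:.0f}% utilized")
```

An empty result for this metric, while other DCGM_FI_* lines are present, is consistent with the counter being disabled rather than with DCGM being broken.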

USING GPU WITH DOCKER ON KUBERNETES INSTALL

The state of NVIDIA GPU metrics and monitoring in Kubernetes is changing rapidly and is often poorly documented, both officially and on common troubleshooting channels (GitHub, StackOverflow). Tutorials online often feature outdated information, and because these tools change so quickly, problems can arise even from otherwise very clear install processes. This post is written to help users set up the software needed to use these metrics; to that end, I have included a list of some of the issues I faced.

GPU acceleration is a rapidly evolving field within Kubernetes, and it is worth getting right: running even a single node with a requestable GPU can cost upwards of several hundred or even several thousand USD monthly. With the GPU Operator emitting utilization metrics, you can use this data for more robust operations such as cost analysis with Kubecost, which integrates with NVIDIA DCGM metrics to offer further insight into GPU-accelerated workloads, including cost visibility and identification of overprovisioned GPU resources.

Although the syntax for GPU requests and limits is similar to that of CPUs, Kubernetes does not inherently have the ability to schedule GPU resources. To handle the /gpu resource, the nvidia-device-plugin DaemonSet must be running. This DaemonSet may already be installed by default; you can check by running kubectl get ds -A. Then, on a node with a GPU, run kubectl describe node and check whether the /gpu resource is allocatable. If you already have a Docker image with the NVIDIA runtime configured, you can have it deployed on the cluster in minutes.

To confirm that the DCGM exporter is serving metrics, run kubectl -n gpu-operator-resources port-forward service/nvidia-dcgm-exporter 8080:9400 and navigate to localhost:8080/metrics.

On GKE, GPUs can also be split into multi-instance partitions, and each GPU on each node within a node pool is partitioned the same way. For example, consider a node pool with two nodes, four GPUs on each node, and a partition size of 1g.5gb. GKE creates seven partitions of size 1g.5gb on each GPU. Since there are four GPUs on each node, there will be 28 1g.5gb GPU partitions available on each node.
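The partition arithmetic for the GKE multi-instance example is easy to script when sizing a node pool. This is a small sketch that assumes every GPU in the pool is split identically; the figure of seven 1g.5gb partitions per GPU is taken from the example, not computed by the code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MigNodePool:
    nodes: int
    gpus_per_node: int
    partitions_per_gpu: int  # e.g. 7 for the 1g.5gb size in the GKE example

    def partitions_per_node(self) -> int:
        # Every GPU on every node in the pool is partitioned the same way.
        return self.gpus_per_node * self.partitions_per_gpu

    def partitions_in_pool(self) -> int:
        return self.nodes * self.partitions_per_node()


pool = MigNodePool(nodes=2, gpus_per_node=4, partitions_per_gpu=7)
print(pool.partitions_per_node())  # 28
print(pool.partitions_in_pool())   # 56
```

The per-node figure is what matters for scheduling, since a pod's partitions must all come from a single node.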

Kubernetes does not inherently have the ability to schedule GPU resources, so using GPUs requires third-party device plugins. Additionally, there is no native way to determine utilization, per-device request statistics, or other metrics; this information is an important input to analyzing GPU efficiency and cost, which can be a significant expenditure. This article explores the use of GPUs in Kubernetes, outlines the key metrics you should be tracking, and details the process of setting up the tools required to schedule and monitor your GPU resources.

Once the cluster has started, the next step is to log into each node.

USING GPU WITH DOCKER ON KUBERNETES DRIVERS

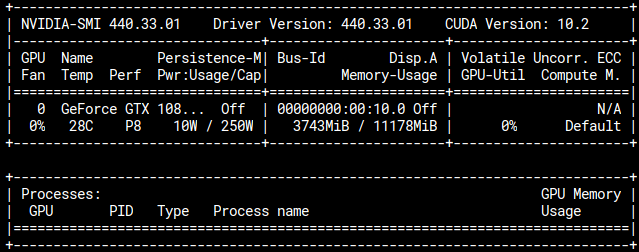

If you’re familiar with the growth of ML/AI development in recent years, you’re likely aware that GPUs are used to speed up the intensive calculations required for tasks like deep learning. Using GPUs with Kubernetes allows you to extend the scalability of K8s to ML applications.

Step 1: Start a GPU-enabled cluster. The first step is to launch a GPU-enabled Kubernetes cluster.

Step 2: Install the GPU drivers on each cluster node.
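With the drivers and the NVIDIA device plugin in place, a workload asks for a GPU through its container limits. The sketch below builds a minimal pod manifest; the pod name, container name, and image are hypothetical placeholders, and the resource name `nvidia.com/gpu` assumes the NVIDIA device plugin. It sets only the limit (Kubernetes then uses it as the request) and rejects fractional counts, because GPUs exposed as extended resources are counted in whole units.

```python
import json

GPU_RESOURCE = "nvidia.com/gpu"  # resource name advertised by the NVIDIA device plugin


def gpu_pod(name: str, image: str, gpus: int) -> dict:
    """Build a minimal pod manifest that requests `gpus` whole GPUs."""
    # Extended resources such as GPUs must be whole numbers; fractions are rejected.
    if isinstance(gpus, bool) or not isinstance(gpus, int) or gpus < 1:
        raise ValueError("GPU count must be a positive whole number")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "main",  # hypothetical container name
                    "image": image,
                    # Only the limit is set; Kubernetes uses it as the request too.
                    "resources": {"limits": {GPU_RESOURCE: str(gpus)}},
                }
            ],
        },
    }


# Hypothetical pod name and image.
pod = gpu_pod("cuda-test", "example.com/my-cuda-app:latest", gpus=1)
print(json.dumps(pod, indent=2))
```

Piping the printed JSON into `kubectl apply -f -` creates the pod; if it stays Pending, the node check from `kubectl describe node` is the first place to look.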
